Knowledge. The fact that you, dear reader, are probably a student (or an academic) at a university suggests that it is something you deeply value – after all, scientific research at universities has advanced knowledge enormously. Yet however overwhelming the accomplishments of the sciences are, you might be wondering: what are their limits? Is there something that is impossible to know, something that will remain unknown forever?

If you are philosophically minded, you might find the question of ‘whether there is anything that we cannot know’ ill-phrased. Shouldn’t the question be: is there anything we can know? Personally, I think the difference comes down to what we mean by ‘knowing’. For example, do you really know that water is H2O? On the one hand, you could be dreaming, hallucinating, or stuck in some kind of illusion – any of which could deceive you into thinking that water is H2O. Or maybe you’re just a character in a book and the author wants you to believe that water is H2O. If we take those possibilities seriously, then there is very little that we can know. On the other hand, we generally have little reason to doubt what we perceive with our senses, and so we can use them to determine whether there is reliable evidence for a specific claim. In this sense, we know that water is H2O because the evidence from chemistry and physics consistently tells us so. Both approaches are interesting to discuss, but since the former line of argument often doesn’t lead anywhere (yes, we could all be dreaming, but so what?), I’ll focus on the latter – which is also the one adopted by scientific researchers.

Once we accept that humans are generally capable of acquiring new knowledge, it makes sense to ask to what extent the world itself is intelligible. For any natural process, is it possible to find its underlying causes? For the most part, there are indeed causal relations we can discover, even if the process at first seems to be up to chance. For example, when throwing a die, you could theoretically work out exactly how you need to throw it in order to get a specific number. In other words, the throw is deterministic: the number you get is purely determined by the conditions under which you throw the die. In contrast, according to our current worldview in the physical sciences, there are also a few processes that are at least partially up to chance – they are indeterministic. For example, when measuring an electron, you will never be able to predict with certainty at which exact location in the atomic shell you will find it, irrespective of how much you know about the underlying conditions. Hence, the outcomes of indeterministic processes are by definition among the things we cannot know in advance, because if we could predict them based on previous knowledge, they wouldn’t be up to chance. In physics, the big question remains to what extent chance influences different kinds of processes, but for now we’ll go with the assumption that indeterminism is only present in the quantum realm, while large-scale physical processes (such as throwing a die) are determined by prior conditions and are not up to chance.

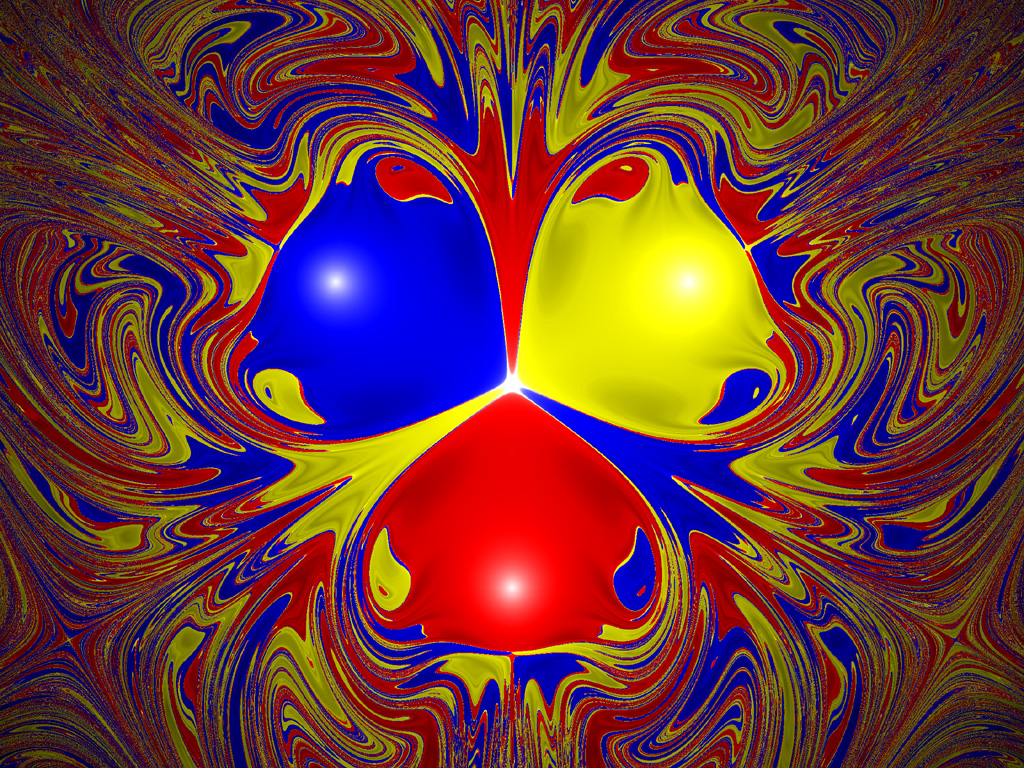

If we take the position of determinism, are there still things that cannot be known? After all, determinism entails that with the appropriate prior information we can know for sure how a specific system will behave in the future, so there should be no obstacle in principle. Unfortunately, it is not that easy, because it turns out that some systems require an unlimited amount of prior information, namely arbitrarily precise knowledge of their starting conditions, in order to be fully predictable. If you try to model the behaviour of these systems, you will often find a fractal – a pattern that shows infinite complexity. For example, consider a pendulum that is attracted by three magnets on the ground. Depending on the position from which you release the pendulum, it will end up at a different magnet. If we colour each starting position according to the magnet at which the pendulum ends up, the resulting map looks something like this:

Image Courtesy of Paul Nylander

In the picture above, the three white dots in the big blue, yellow, and red areas mark where the three magnets are located, while the colour of each point indicates at which magnet the pendulum ends up if it is released from that position. So if you release the pendulum, say, in the small red area between the big blue and yellow areas (i.e. the second biggest red area in the picture), then neither the blue nor the yellow magnet will capture it; instead, it will swing towards the magnet in the big red area, where it will finally come to rest. Yet if you release the pendulum somewhere where no colour clearly dominates (e.g. somewhere in the corner), it is unclear at which magnet it will end up. The important point here is that those unclear areas have a fractal structure with unlimited complexity: no matter how far you zoom in on these parts of the map, the intricate pattern repeats itself and never vanishes. As a consequence, tiny deviations in the starting position of the pendulum can lead to entirely different outcomes. This is why it is almost impossible to know where the pendulum will end up in this case, even though the system is entirely deterministic (Du Sautoy, 2016).
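For readers who like to experiment, the pendulum can be approximated in a few lines of Python. This is only a rough sketch under simplifying assumptions of my own, not the model behind the picture: the bob is treated as moving in a flat plane slightly above the magnets, and the constants `HEIGHT`, `SPRING`, and `FRICTION`, the magnet positions, and the function name `final_magnet` are all illustrative choices.

```python
import math

# Illustrative setup (assumed values, not taken from the picture):
# three magnets on the unit circle, 120 degrees apart.
MAGNETS = [(math.cos(2 * math.pi * i / 3), math.sin(2 * math.pi * i / 3))
           for i in range(3)]
HEIGHT = 0.25    # assumed vertical gap between the bob and the magnets
SPRING = 0.2     # assumed strength of the pull back towards the centre
FRICTION = 0.2   # assumed damping, so the bob eventually comes to rest


def final_magnet(x, y, dt=0.01, steps=10_000):
    """Release the bob at rest from (x, y); return the index (0, 1 or 2)
    of the magnet it is closest to after the simulated time has passed."""
    vx = vy = 0.0
    for _ in range(steps):
        # Restoring force towards the centre plus friction.
        ax = -SPRING * x - FRICTION * vx
        ay = -SPRING * y - FRICTION * vy
        # Attraction of each magnet, softened by the vertical gap.
        for mx, my in MAGNETS:
            dx, dy = mx - x, my - y
            inv = (dx * dx + dy * dy + HEIGHT * HEIGHT) ** -1.5
            ax += dx * inv
            ay += dy * inv
        # Semi-implicit Euler step: update the velocity first, then the position.
        vx += ax * dt
        vy += ay * dt
        x += vx * dt
        y += vy * dt
    return min(range(3),
               key=lambda i: (MAGNETS[i][0] - x) ** 2 + (MAGNETS[i][1] - y) ** 2)
```

Calling `final_magnet` for two starting points that differ only slightly, for instance `final_magnet(-1.5, 1.5)` and `final_magnet(-1.5 + 1e-6, 1.5)`, lets you probe the sensitivity described above. Whether a particular pair of points ends up at different magnets depends on where they lie on the map and on the assumed constants, so it is worth trying several starting positions.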

You might be thinking that a pattern with infinite complexity is already difficult enough to study, but it doesn’t stop there. In addition, the system you are trying to investigate could have an emergent property, i.e. a property that cannot simply be reduced to the system’s parts. In other words, the system can have a distinctive quality that none of its constituents has, which makes it even harder to understand how exactly this property arises. Life is one such example. If you look at a living cell, you will find that none of its components are alive; and if you study each of its parts separately, you won’t get an answer to the question of how life emerges from its non-living elements (e.g. proteins, genes, etc.). It is only by taking into account the dynamic interaction between the elements of the complex system that one can begin to understand its emergent property (Mitchell, 2009).

“The mind is simply the next step on the ladder of complexity”

How do all of these insights fit into the study of the mind? Seen through the lens of complexity science, psychology could be viewed as a direct extension of the natural sciences: the mind is simply the next step on the ladder of complexity, with regard to both fractals and emergence. First, just as the magnetic pendulum creates a never-ending pattern that is impossible to map completely, fractal patterns can be found in the human brain as well, both in its form and in its function (Di Leva, 2016). Second, the human mind cannot simply be reduced to its parts – it is emergent, just like other natural phenomena: chemical reactions are emergent phenomena based on physical processes; life is an emergent phenomenon based on chemical processes; and in the same way our mind is an emergent phenomenon based on living cells (Mitchell, 2009). In short, it seems psychology can benefit quite a lot from the advances complexity-oriented researchers have made in the natural sciences.

Why, then, do most psychological researchers rarely talk about this kind of research? Admittedly, several prominent neuroscientists have already proposed ground-breaking theories that describe the human mind as a complex, non-reducible system (e.g. Tononi & Edelman, 1998; Koch, 2004), but most everyday psychology research doesn’t take this perspective. If one delves deeper into the subject, it’s not hard to see why. Taking the complexity of the human brain into account is simply not feasible for most psychological topics, and so researchers have to take certain useful shortcuts. For example, it’s no surprise that someone’s attitude is often measured by just letting the person fill in a survey, rather than by directly studying the neural mechanisms underlying this attitude. By using these shortcuts, we can still gain at least some knowledge about the incredibly complicated processes that are currently out of our reach – whether they concern the human psyche, financial markets, or political systems. Yet acknowledging their inherent complexity can still give us a more profound understanding of these issues – even if it makes us more aware that the answers to some scientific questions might always stay unknown.

References

– Di Leva, A. (2016). The Fractal Geometry of the Brain. New York, US: Springer.

– Du Sautoy, M. (2016). What We Cannot Know. London, UK: Harper Collins.

– Mitchell, M. (2009). Complexity: A Guided Tour. Oxford, UK: Oxford University Press.

– Tononi, G. & Edelman, G. M. (1998). Consciousness and Complexity. Science, 282(5395), 1846-1851.
